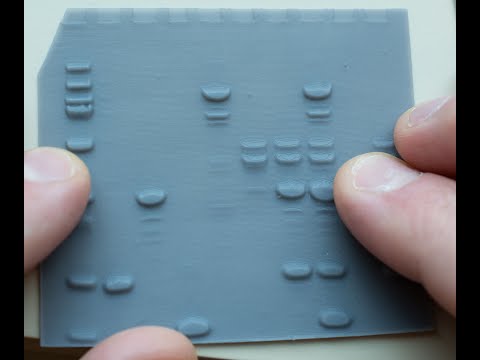

[Image above] Researchers led by Baylor University converted 2D digital images into 3D tactile graphics using a lithophane format. From left to right: Digital source image of sodium dodecyl sulfate–polyacrylamide gel electrophoresis (SDS-PAGE), frontlit lithophane, and backlit lithophane. Credit: Koone et al., Science Advances (CC BY-NC 4.0)

When students face difficulties reading a scientific paper, they often turn to the figures in hopes that the graphical representation of data can bolster their understanding. However, for students with visual impairments, traditional pictures and graphs do not add much in the way of enlightenment because they cannot see the flat, 2D-printed images.

Tactile graphics (raised lines, textures, and elevated illustrations) are an alternative image format that likely would benefit students with visual impairments. Unfortunately, examples of tactile illustrations in the literature are few and far between, and the ones that exist face challenges such as low resolution, high cost, or the need for specialized knowledge.

Thus, there is a need for a high-resolution, low-cost, and portable data format that preferably can be visualized by anyone regardless of eyesight level. “Such a format will enable blind and sighted individuals to share—to visualize and discuss—the exact same piece of data,” researchers write in a recent open-access paper.

The researchers are led by Baylor University in Texas and include colleagues from Yale University, Wedland Group, and Educational Testing Service. Their study investigated the possibility of creating tactile graphics using a lithophane.

Lithophanes are etched or molded artworks, typically less than 2 mm in thickness, traditionally made from porcelain or wax but now more commonly from plastic. The surface appears opaque in ambient “front” light, but the lithophane glows like a digital image when backlit, i.e., held in front of a light source.

Lithophanes can now be 3D printed from any 2D image by converting the image to a 3D topograph. Despite this ease of fabrication, the use of lithophanes as a universally visualized data format has never been reported, according to the researchers.
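To make the image-to-topograph idea concrete, here is a minimal sketch of one way a grayscale image could be turned into a thickness map. It is an illustration only, not the researchers' workflow: the function name `grayscale_to_thickness`, the linear mapping, and the 0.4 mm and 2.0 mm thickness limits are all assumptions chosen for the example (the upper limit loosely follows the "less than 2 mm" figure above). The premise is that darker pixels are printed thicker, so they transmit less light when the lithophane is backlit.

```python
def grayscale_to_thickness(pixels, min_mm=0.4, max_mm=2.0):
    """Map 8-bit grayscale pixels to lithophane thickness in millimeters.

    Assumptions (not from the study): a linear mapping, with black (0)
    printed at max_mm and white (255) printed at min_mm.
    """
    if not 0 < min_mm < max_mm:
        raise ValueError("expected 0 < min_mm < max_mm")
    span = max_mm - min_mm
    heights = []
    for row in pixels:
        # Darker pixels get more material, so less backlight passes through.
        heights.append([max_mm - (p / 255) * span for p in row])
    return heights


# A tiny 2x3 "image": darker values on the top row, lighter ones below.
image = [
    [0, 64, 128],
    [192, 230, 255],
]
for row in grayscale_to_thickness(image):
    print([round(h, 2) for h in row])
```

The resulting grid of heights is only a topograph. Turning it into a printable object would still require converting it to a 3D mesh and slicing it for a printer, steps this sketch leaves out.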

(A) Appearance of lithophane with front lighting (from overhead ceiling lights). (B) Same lithophane in (A) held up to the same ceiling light for back lighting. Credit: Koone et al., Science Advances (CC BY-NC 4.0)

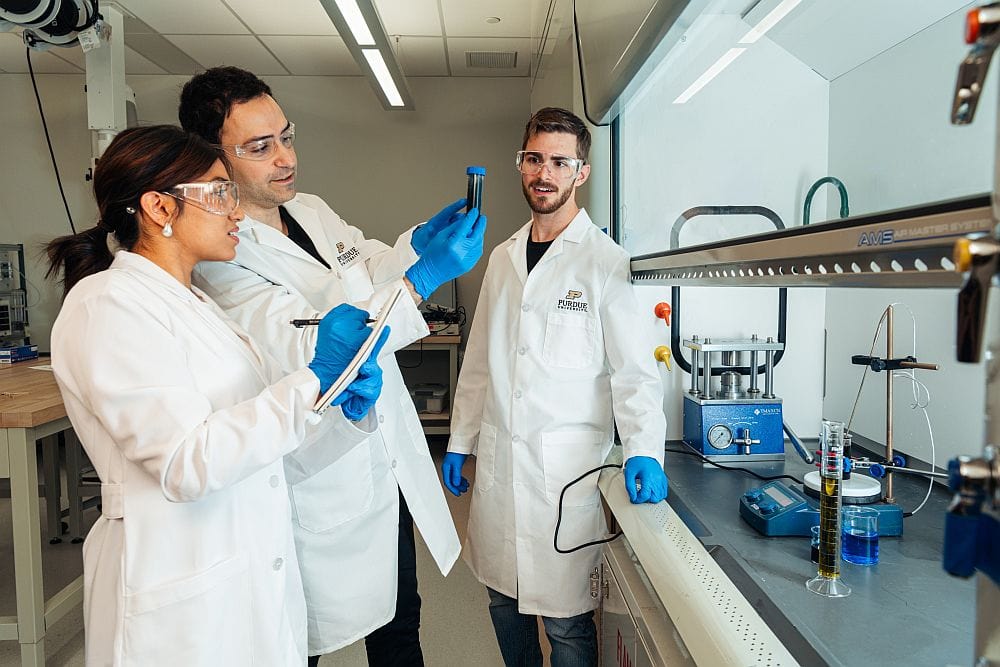

For this study, the researchers focused on creating and testing lithophanes featuring data found in the chemical sciences. They chose this topic area because while exclusion of students with visual impairments in chemistry “can be viewed as a virtue … on the basis of laboratory safety and the ‘visual’ nature of chemistry … exclusion from chemistry impedes learning in other fields that might be more inclusive,” the researchers write.

In addition, the researchers state that four of the authors have been blind since birth or childhood and are among the small group of people who earned Ph.D. degrees in the chemical sciences while being blind.

The researchers created the lithophanes using a small commercial 3D printer, focusing initially on the most common data type in biochemistry: sodium dodecyl sulfate–polyacrylamide gel electrophoresis (SDS-PAGE). They also fabricated lithophanes highlighting other types of data, including a scanning electron micrograph of a butterfly chitin scale, a mass spectrum of a protein with gas phase phosphate adducts, an electronic (ultraviolet-visible) spectrum of an iron-porphyrin protein, and a textbook-style secondary structure map of a seven-stranded β sheet protein.

The testing cohort consisted of sighted students with or without blindfolds and five people with vision impairments who have experienced total blindness or low vision since childhood or adolescence. They were asked to interpret the lithophane data by tactile sensing or eyesight.

Credit: @WacoChemist, YouTube

The average test accuracy for all five lithophanes was 96.7% for blind tactile interpretation, 92.2% for sighted interpretation of backlit lithophanes, and 79.8% for blindfolded tactile interpretation. In contrast, the accuracy of interpretation of digital imagery on a computer screen was 88.4% by eyesight.

For about 80% of questions, tactile accuracy by blind chemists was equal or superior to visual interpretation of lithophanes. The researchers speculate this disparity is attributable in part to the higher educational level of the blind cohort.

“Four of the five blind persons tested in this study have earned PhDs in the chemical sciences, whereas the sighted participants were undergraduate students enrolled in undergraduate biochemistry, who might have misinterpreted the spectrum,” they write.

Although the study did not examine chromatic data, the researchers expect that the visualization of colored data—such as heatmaps and 2D color plots—could be accomplished with lithophanes projecting a monotonic grayscale.

“This research is an example of art making science more accessible and inclusive. Art is rescuing science from itself,” says Bryan Shaw, Baylor professor of chemistry and biochemistry and lead author of the recent study, in a Baylor press release.

Shaw recently was awarded a $1.3 million grant from the National Institutes of Health for a first-of-its-kind early intervention project that opens laboratory work to students who are blind or visually impaired and provides them with tactile chemistry education materials and equipment.

The five-year grant, in partnership with the Texas School for the Blind and Visually Impaired (TSBVI) in Austin, will initially focus on developing a pilot program in which 150 high school students at TSBVI participate in education and train with materials both on the school campus and at Shaw’s lab at Baylor.

The pilot is expected to launch this fall, with the full program expected to run from spring 2023 through 2027. In the future, the team hopes to scale the program to include resources for children just beginning the study of science.

The open-access paper, published in Science Advances, is “Data for all: Tactile graphics that light up with picture-perfect resolution” (DOI: 10.1126/sciadv.abq2640).

Update 9/7/2022 – Opening paragraph was modified.

Author

Lisa McDonald
