[Image above] Credit: Pixabay

Over the past 10 years, a growing number of researchers have harnessed data science techniques for materials discovery and design.

Data science is an interdisciplinary field that uses scientific methods, processes, algorithms, and systems to extract knowledge and insight from noisy, structured, and unstructured data. Instead of slowly identifying new materials through trial and error, researchers who adopt a data science framework use advanced computational techniques such as machine learning to quickly identify new materials worth further exploration.

A challenge of using machine learning for materials design, however, is the need for a large training data set. Traditionally, research teams do not share their data with others, which makes it difficult to assemble enough data for training. Even when data is available, it is rarely stored in a format that can be used to train an algorithm.

While there are workarounds to these challenges, such as data augmentation, researchers still face another limitation of current machine learning methods: detecting symmetry, periodicity, and long-range order in a material’s structure.
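Data augmentation enlarges a small training set by adding transformed copies of existing images, and rotations and mirror flips are a natural choice for microscopy images. The Python sketch below assumes 2D grayscale images stored as NumPy arrays; the helper name `dihedral_augment` and the toy patch are hypothetical, not taken from the paper.

```python
import numpy as np


def dihedral_augment(image: np.ndarray) -> list[np.ndarray]:
    """Return the 8 rotated and mirrored copies of a 2D image."""
    copies = []
    for k in range(4):
        rotated = np.rot90(image, k)       # rotate by k * 90 degrees
        copies.append(rotated)
        copies.append(np.fliplr(rotated))  # mirror image of the rotated copy
    return copies


# A hypothetical 3x3 "micrograph" patch, used only for illustration.
patch = np.arange(9).reshape(3, 3)
augmented = dihedral_augment(patch)
print(len(augmented))  # 8
```

Augmentation like this exposes a network to more orientations of the same structure, but it does not give the network any built-in notion of symmetry.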

A material’s structure plays a major role in determining the material’s properties and behavior. Unfortunately, convolutional neural networks (CNNs), a class of machine learning models commonly used to analyze visual imagery, cannot easily detect structural features such as symmetry, periodicity, and long-range order.

“In practice, to make CNNs computationally tractable, it is common to add pooling layers that use a region-based summary statistic to reduce the dimensionality of the data. As a result of pooling layers, CNNs are commonly translationally invariant, meaning they can detect if a feature exists in an image but cannot determine its precise location,” researchers write in a recent open-access paper.

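In other words, pooling tells a CNN whether a feature is present but discards where it is, which makes it hard for the network to capture periodic, long-range arrangements. CNNs are also not inherently rotation invariant: a network may treat a rotated image as a different object, which prevents it from recognizing similarities between images of the same structure taken at different angles.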
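The pooling step described in the quote can be illustrated with a minimal NumPy sketch of non-overlapping 2x2 max pooling. The toy 4x4 images and the `max_pool_2x2` helper are illustrative assumptions, not code from the paper.

```python
import numpy as np


def max_pool_2x2(image: np.ndarray) -> np.ndarray:
    """Non-overlapping 2x2 max pooling; image height and width must be even."""
    h, w = image.shape
    # View the image as a grid of 2x2 blocks and keep each block's maximum.
    blocks = image.reshape(h // 2, 2, w // 2, 2)
    return blocks.max(axis=(1, 3))


# One bright pixel (a toy "feature") in an otherwise empty 4x4 image.
original = np.zeros((4, 4))
original[0, 0] = 1.0

shifted = np.zeros((4, 4))
shifted[1, 1] = 1.0  # same feature, moved one pixel down and to the right

print(max_pool_2x2(original))
print(np.array_equal(max_pool_2x2(original), max_pool_2x2(shifted)))  # True
```

Both images pool to the same 2x2 map, so the pooled output records that the feature sits in the top-left block but not where inside that block. The invariance is only local: moving the pixel to position (2, 2) puts it in a different block and changes the pooled map.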

Techniques such as 2D rotation-equivariant and 3D Euclidean neural networks can correct this limitation, but CNNs still do not inherently understand symmetry. As a result, these corrective techniques generally support conclusions only within narrow bounds, and if a new example lies on the periphery of the training data distribution, the predictions might be nonsensical.
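One common idea behind rotation-equivariant networks is to apply rotated copies of the same filter and pool over the results. The NumPy sketch below shows this only for 90-degree rotations of a single hand-made edge filter. It is a toy illustration of the principle, not the method used in the paper, and the helper names are hypothetical.

```python
import numpy as np


def correlate_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Plain 2D cross-correlation without padding, as in a CNN conv layer."""
    kh, kw = kernel.shape
    out = np.empty((image.shape[0] - kh + 1, image.shape[1] - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def plain_feature(image: np.ndarray, kernel: np.ndarray) -> float:
    """Strongest response of a single, fixed filter anywhere in the image."""
    return float(correlate_valid(image, kernel).max())


def rotation_pooled_feature(image: np.ndarray, kernel: np.ndarray) -> float:
    """Strongest response over the filter's four 90-degree rotations."""
    return max(plain_feature(image, np.rot90(kernel, k)) for k in range(4))


# Responds to a dark-to-bright step from left to right.
edge_filter = np.array([[-1.0, 1.0], [-1.0, 1.0]])
image = np.zeros((6, 6))
image[:, 3:] = 1.0         # a vertical edge
rotated = np.rot90(image)  # the same edge, turned 90 degrees

print(plain_feature(image, edge_filter), plain_feature(rotated, edge_filter))
print(rotation_pooled_feature(image, edge_filter),
      rotation_pooled_feature(rotated, edge_filter))
```

The single fixed filter scores 2 on the vertical edge but 0 once the image is rotated, so a model built on it would treat the two images differently. Taking the maximum over the four rotated filters gives 2 in both cases, because rotating the image only changes which filter copy responds. Arbitrary rotation angles and deep networks need more elaborate constructions.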

Currently, it is not possible to develop a neural network that inherently understands symmetry. However, improving a neural network’s ability to approximate symmetry without relying on corrective techniques is an achievable goal, and it is the goal pursued by the researchers of the open-access paper quoted above.

The researchers come from Lehigh and Stanford universities, and they are led by Joshua Agar, assistant professor of materials science and engineering at Lehigh. In the paper, they explain how they developed a neural network with improved symmetry awareness.

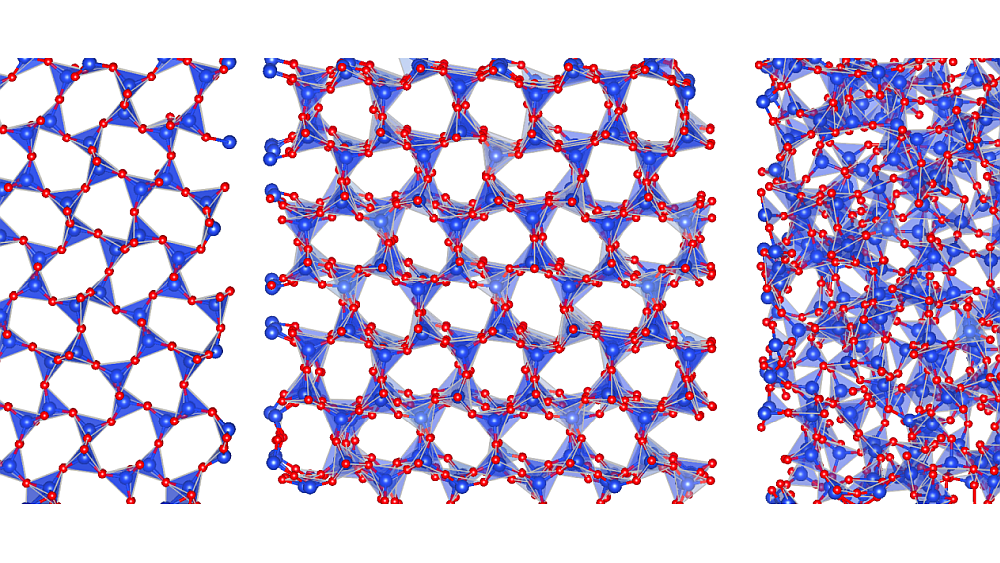

They began by developing and training two complementary neural network-based algorithms. One classifies natural images, and the other classifies images according to their wallpaper group, i.e., one of the 17 mathematical classifications of 2D repeating patterns.
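To make the wallpaper-group idea concrete, the Python sketch below builds two periodic patterns from the same random motif: one that only repeats by translation (generically wallpaper group p1) and one whose unit cell is first made 4-fold rotationally symmetric on a square lattice (the rotation-only group p4). It is a toy illustration, not the training-data generator used in the paper; `p4_pattern` and the 8-pixel motif size are hypothetical choices.

```python
import numpy as np


def p4_pattern(motif: np.ndarray, repeats: int) -> np.ndarray:
    """Tile a square motif after making it 4-fold rotationally symmetric."""
    # Summing the motif's four 90-degree rotations yields a cell unchanged by rot90.
    cell = sum(np.rot90(motif, k) for k in range(4))
    return np.tile(cell, (repeats, repeats))


rng = np.random.default_rng(0)
# Integer pixel values keep the symmetry checks below exact.
motif = rng.integers(0, 10, size=(8, 8))

p1 = np.tile(motif, (3, 3))  # translation only
p4 = p4_pattern(motif, 3)    # translation plus 90-degree rotations

for name, pattern in [("p1", p1), ("p4", p4)]:
    periodic = np.array_equal(np.roll(pattern, 8, axis=0), pattern)
    rotation = np.array_equal(np.rot90(pattern), pattern)
    print(name, "periodic:", periodic, "90-degree symmetric:", rotation)
```

Both patterns repeat every 8 pixels, but only the symmetrized one is unchanged by a 90-degree rotation; distinctions like this are what a wallpaper-group classifier must learn to detect.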

After training the algorithms, the researchers used manifold learning techniques to create 2D projections that placed images near one another based on their composition and structure, irrespective of the images’ length scale. Repeating this exploration recursively revealed more nuanced similarities between images.
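The specific manifold-learning method is not detailed in this article. As a simple stand-in, the Python sketch below projects hypothetical image feature vectors to 2D with principal component analysis (PCA), which is a linear method rather than a true manifold learner, using only NumPy. The feature vectors, their 64-dimensional size, and the helper names are illustrative assumptions.

```python
import numpy as np


def pca_2d(features: np.ndarray) -> np.ndarray:
    """Project feature vectors (one row per image) onto their top two principal axes."""
    centered = features - features.mean(axis=0)
    # Rows of vt are principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:2].T


def nearest_neighbor(points: np.ndarray) -> np.ndarray:
    """Index of each point's closest other point in the projection."""
    dists = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)  # ignore each point's distance to itself
    return dists.argmin(axis=1)


# Hypothetical 64-dimensional features for two kinds of images, 10 of each.
rng = np.random.default_rng(1)
centers = rng.normal(size=(2, 64))
labels = np.repeat([0, 1], 10)
features = centers[labels] + rng.normal(scale=0.1, size=(20, 64))

coords = pca_2d(features)
neighbors = nearest_neighbor(coords)
print(coords.shape)  # (20, 2)
print(np.all(labels[neighbors] == labels))
```

In this toy projection each image’s nearest neighbor belongs to its own synthetic group, which is what lets a researcher browse a 2D map to find similar micrographs. Nonlinear methods such as UMAP or t-SNE are often preferred for real image features because they can preserve local structure that a linear projection flattens.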

After demonstrating their approach on the generated images, the researchers applied their neural network to piezoresponse force microscopy images collected on diverse materials systems over five years at the University of California, Berkeley. The results? The network successfully grouped similar classes of materials together and revealed trends, even though it had no inherent understanding of the materials system, structure, or underlying symmetry.

In an email, Agar says the results of this study are exciting not only because of the successful approximations but also because they offer a first “use case” of an innovative new data-storage system called DataFed.

As noted earlier, there is a lack of robust data repositories for training machine learning algorithms. The new DataFed system, which is housed at Oak Ridge National Laboratory, is meant to fill this gap.

DataFed is a federated system that manages “the data storage, communication, and security infrastructure present within a network of participating organizations and facilities,” according to the ORNL website. An interdisciplinary team at Lehigh University, called the Presidential Nano-Human Interface Initiative, played an active role in the design and development of DataFed.

Agar is a part of the Presidential NHI team, and he says the new symmetry algorithm they developed will be integrated with the DataFed system to address what he sees as the two-sided problem of data science.

“There are no suitable data repositories for collecting, collating, and searching scientific data, and there are no good tools to extract knowledge simply from scientific databases. DataFed addresses the former, and the manuscript addresses the latter,” he says.

Agar says that as the team works to integrate the symmetry model with DataFed, they continue refining the model to make it more user-friendly. “All of the software is being developed open-source under nonrestrictive licenses. We hope to build a community of users that can help develop features,” he says.

The open-access paper, published in npj Computational Materials, is “Symmetry-aware recursive image similarity exploration for materials microscopy” (DOI: 10.1038/s41524-021-00637-y).

Author

Lisa McDonald
