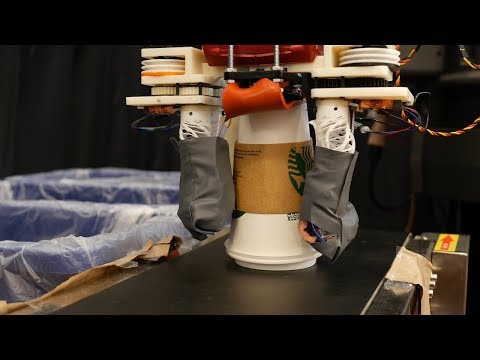

[Image above] RoCycle, a recycling robot, uses soft hands to “feel” the difference between plastic, metal, and paper. Credit: MITCSAIL; YouTube

Yesterday was Earth Day—a day to show your love and support for the only planet we call home (yet).

With concerns about climate change rising as fast as global temperatures, more and more Earth citizens are becoming aware that change is necessary if we want future generations to still be able to enjoy all this pale blue dot has to offer decades down the road.

Although broader policy changes and bigger shifts in how we exist on Earth are needed, even small actions can help—such as driving your car less often, minimizing use of plastic, and recycling.

But as I wrote a few weeks ago, current recycling efforts face big problems. Recent shifts in material import policies in China, a country that once imported a vast proportion of the world’s recyclable materials, have exposed major weaknesses in how we recycle.

In fact, a shocking amount of material is never recycled, even though it is recyclable. Today, our use of materials can more accurately be described as a materials dead end than as any sort of cycle.

One of the complex challenges facing recycling in the United States today is single-stream recycling. Mixing materials together is undoubtedly simpler for consumers, but it makes it much harder for sorting facilities to separate those mixed materials back into pure individual material streams. That separation is often done manually, which makes it time-consuming, labor-intensive, costly, and imperfect.

To get around labor-intensive and costly manual separation, some recycling facilities are enlisting robots equipped with artificial intelligence to sort materials visually. These robots often rely on imaging or optical sensors to separate mixed material streams (for instance, differentiating materials based on how light reflects off them).

One manufacturer of such robots, ZenRobotics, uses a combination of light spectrum sensors, high-resolution cameras, imaging metal detectors, LED lights, and/or 3D sensor systems for its material separation systems.

But imaging-based sorting technology does have its drawbacks, according to an MIT Technology Review article. “When you’re sorting through a huge stream of waste, there’s a lot of clutter and things get hidden from view,” Lillian Chin, a computational material robots researcher (and 2017 Jeopardy College Champion!) at MIT’s Computer Science and Artificial Intelligence Lab (CSAIL), says in the article. “You can’t really rely on image alone to tell you what’s going on.”

And that’s precisely why Chin and a team of researchers at CSAIL have developed a new robot that relies on a gentler touch to separate materials.

Instead of using imaging to visually separate materials, the team’s RoCycle robot uses two flexible silicone “hands” to feel the difference between paper, metal, and plastic.

The researchers designed those hands to be auxetic—meaning that when they are stretched, the structure gets thicker, not thinner (as most materials do when stretched).
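In materials-science terms, auxetic behavior corresponds to a negative Poisson’s ratio. Using the standard textbook definition (general background, not a value reported for RoCycle’s hands), with strain measured along the stretch direction and across it:

```latex
\nu = -\frac{\varepsilon_{\perp}}{\varepsilon_{\parallel}}
\qquad
\begin{cases}
\nu > 0 & \text{conventional: stretching } (\varepsilon_{\parallel} > 0) \text{ makes it thinner } (\varepsilon_{\perp} < 0) \\
\nu < 0 & \text{auxetic: stretching } (\varepsilon_{\parallel} > 0) \text{ makes it thicker } (\varepsilon_{\perp} > 0)
\end{cases}
```

Many common engineering materials have a Poisson’s ratio between roughly 0.2 and 0.5, which is why thinning under tension is the everyday expectation.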

That design gives the robot’s soft hands flexibility, allowing them to grasp and assess objects of various shapes and sizes. The CSAIL team even designed its auxetics with handedness, so RoCycle has a left hand and a right hand.

“By combining handed shearing auxetics with high deformation capacitive pressure and strain sensors, we present a new puncture resistant soft robotic gripper,” the team writes in an online paper describing their work.

Credit: MITCSAIL; YouTube

The hands are mounted on a Baxter robot from Rethink Robotics, the same model that Du-Co Ceramics deployed on its ceramic production lines. [Note: Rethink Robotics announced in late 2018 that the company was folding.]

And inside the robot’s soft-touch hands are capacitive sensors that are sensitive to mechanical deformation, allowing the robot to feel the size and stiffness of an object.

These capacitive sensors, including both pressure and strain sensors, are built from layers of conductive silicone (a composite of silicone and expanded graphite), dielectric foam (silicone and sugar), and nonconductive silicone, allowing the sensors to bend along with the robot’s hands and remain functional even if punctured.
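The sensing principle can be illustrated with the textbook parallel-plate approximation. This is a simplification, not the researchers’ own sensor model or calibration. Here the conductive-silicone layers play the role of the plates and the foam the dielectric; $\varepsilon_0$ is the vacuum permittivity, $\varepsilon_r$ the dielectric’s relative permittivity, $A$ the electrode overlap area, and $d$ the dielectric thickness:

```latex
C = \frac{\varepsilon_0 \varepsilon_r A}{d}
\qquad\Longrightarrow\qquad
\frac{C}{C_0} = \frac{A}{A_0}\cdot\frac{d_0}{d}
\quad (\text{assuming } \varepsilon_r \text{ stays constant})
```

Squeezing the foam shrinks the gap $d$, and stretching the layers enlarges the overlap area $A$ (while typically thinning the dielectric). Either change raises the capacitance, so the measured value tracks how much the hand has deformed around an object.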

In addition to feeling how stiff an object is, the sensors also allow RoCycle to detect if an object is conductive, giving it the ability to detect electronics hidden in plastic cases, for example.

The robot compares the sensors’ estimates of an object’s size and stiffness, along with its conductivity, against reference data for different types of materials, which allows it to make its best guess about what the object is made of.
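The article does not spell out the team’s classification algorithm, so the following is only a minimal sketch of the general idea. It uses a generic k-nearest-neighbors classifier (a swapped-in technique, not necessarily what CSAIL used) that compares one grasp’s estimated radius, stiffness, and conductivity against labeled reference readings. The `Reading` type, the feature scaling, and every number in `REFERENCE` are hypothetical, invented for illustration.

```python
import math
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    """Hypothetical features extracted from one grasp."""
    radius_mm: float   # estimated object radius, in millimeters
    stiffness: float   # normalized stiffness score: 0 = soft, 1 = hard
    conductive: bool   # whether the sensors registered a conductive surface


# Hypothetical labeled reference readings (illustrative values only).
REFERENCE = [
    (Reading(35.0, 0.9, True), "metal"),
    (Reading(33.0, 0.8, True), "metal"),
    (Reading(40.0, 0.2, False), "paper"),
    (Reading(30.0, 0.3, False), "paper"),
    (Reading(45.0, 0.6, False), "plastic"),
    (Reading(60.0, 0.5, False), "plastic"),
]


def distance(a: Reading, b: Reading, radius_scale: float = 50.0) -> float:
    """Euclidean distance, with radius rescaled so it does not swamp the other features."""
    dr = (a.radius_mm - b.radius_mm) / radius_scale
    ds = a.stiffness - b.stiffness
    dc = float(a.conductive) - float(b.conductive)
    return math.sqrt(dr * dr + ds * ds + dc * dc)


def classify(reading: Reading, k: int = 3) -> str:
    """Return the most common label among the k nearest reference readings.

    Neighbors are sorted nearest-first, and Counter.most_common keeps
    first-seen order on ties, so a tie goes to the closer material.
    """
    nearest = sorted(REFERENCE, key=lambda ref: distance(reading, ref[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    print(classify(Reading(34.0, 0.85, True)))   # a stiff, conductive object
    print(classify(Reading(38.0, 0.25, False)))  # a soft, nonconductive object
```

Giving conductivity a full unit of distance means a conductive reading pulls strongly toward "metal," in the spirit of the sensors flagging electronics hidden inside plastic cases. A real system would tune such weights against measured data.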

“RoCycle builds on a set of sensors that detect the radius of an object to within 30 percent accuracy, and tell the difference between ‘hard’ and ‘soft’ objects with 78 percent accuracy,” according to an article on the CSAIL website.

In a stationary test of 14 different objects (including a paper cup, metal can, plastic football, and Magic 8 Ball), RoCycle was 85 percent accurate in classifying each object as plastic, metal, or paper. When the researchers tested RoCycle on a mock recycling line, it averaged 63 percent accuracy in picking 13 objects off a conveyor belt and sorting them into their respective material bins.

Although the CSAIL team acknowledges that further work is needed to improve the robot’s accuracy, this preliminary work is an important first step.

“Although the classifier is not perfect in performance, any improvement to upstream sorting can have significant quality improvements downstream,” the authors write in the paper.

And because RoCycle’s accuracy could be improved, for instance by adding sensors along the contact surface, touch-based technologies that gauge material stiffness hold significant potential for improving automated separation at material recycling facilities.

One of RoCycle’s biggest drawbacks, however, is speed—vision-based systems can work much faster. According to the MIT Technology Review article, “Some ZenRobotics robots can sort 4,000 objects an hour, for example. [Harri] Holopainen [of ZenRobotics] thinks RoCycle would need to work around 10 times faster to compete.”
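Taking the quoted figures at face value, the size of the speed gap can be worked out with simple arithmetic. This is a back-of-the-envelope reading of Holopainen’s estimate, not a throughput the researchers reported; the variable names are illustrative.

```python
VISION_OBJECTS_PER_HOUR = 4_000  # ZenRobotics figure quoted in MIT Technology Review
SPEEDUP_NEEDED = 10              # Holopainen's rough estimate for RoCycle to compete

SECONDS_PER_HOUR = 3600


def seconds_per_pick(objects_per_hour: float) -> float:
    """Average time available for each pick at a given hourly rate."""
    return SECONDS_PER_HOUR / objects_per_hour


# A 10x gap implies RoCycle currently handles roughly 4,000 / 10 objects per hour.
implied_rocycle_rate = VISION_OBJECTS_PER_HOUR / SPEEDUP_NEEDED
vision_pick_s = seconds_per_pick(VISION_OBJECTS_PER_HOUR)
rocycle_pick_s = seconds_per_pick(implied_rocycle_rate)

print(f"Implied RoCycle rate: {implied_rocycle_rate:.0f} objects/hour")
print(f"Vision system: {vision_pick_s:.1f} s per object")
print(f"RoCycle (implied): {rocycle_pick_s:.1f} s per object")
```

In other words, the fastest vision systems get under a second per object, while the quote implies RoCycle takes on the order of 9 seconds.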

So why choose? The best solution might be separation systems that combine touch-based and vision-based technologies for the most accurate automated separation, and the researchers seem to agree.

“On a system level, this gripper system will need to be incorporated with vision-based technologies to improve accuracy and allow picking in cluttered environments,” the researchers write. “Integration with vision would also allow future systems to have a wider range of detectable objects, including glass and non-recyclable materials.”

Read more about the CSAIL team’s work in two papers the researchers posted online, here and here.

Author

April Gocha
